There’s been a lot of discussion by armchair analysts about the various models being used to predict outcomes of COVID-19. The armchair analysts I’ve seen include a philosophy major and a PhD candidate with little experience in statistics, much less in modeling complex systems. In fact, the discussion coming from academia and its sycophants in the media further demonstrates just how deep the “deep state” runs. For those of us who have built spatial and statistical models, all of this discussion brings to mind George Box’s dictum: “All models are wrong, but some are useful”… or useless, as the case may be.

The problem with data-driven models, especially when data is lacking, can be easily explained. First of all, in terms of helping decision makers make quality decisions, statistical hypothesis testing/data analysis is just one tool in a large toolbox, and it’s based on what we generally call reductionist theory. In short, the tool examines parts of a system (usually by estimating an average/mean) and then makes inferences about the whole system. The tool is usually quite good at testing hypotheses under carefully controlled experimental conditions. For example, the success of the pharmaceutical industry is, in part, due to the fact that it can design and implement controlled experiments in a laboratory. However, even under controlled experimental procedures, the tool has limitations and is subject to sampling error. In reality, the true mean (the true number or answer we are seeking) is unknowable because we cannot possibly measure everything or everybody (that’s why we need a model in the first place), and model estimates always have a certain amount of error.

Simple confidence intervals (CIs) can provide good insight into the precision and reliability (usefulness) of the part estimated by reductionist models. For the COVID-19 models, the so-called “news” appears to be using either the CI from one model or actual estimated values (i.e., means) from a variety of different models as a way of reporting a range for the “predicted” number of people who will contract or die from the disease (e.g., 60,000 to 2 million). Either way, the range in estimates is quite large and quite useless, at least in terms of helping decision makers make such dire decisions about our health, economy, and civil liberties. The armchair analysts’ descriptions of these estimates show how clueless they are about even the simplest statistical interpretation. The fact is, when a model has a CI as wide as those reported, the primary conclusion is that the model is imprecise and unreliable. Likewise, if these wide ranges are coming from the estimated means of several different models, it clearly indicates a lack of repeatability (i.e., again, a lack of precision and reliability). Either way, these types of results are an indication of bias in the data, which can come from many sources (e.g., not enough data, measurement error, reporting error, using too many variables, etc.). For the COVID-19 models, most of the data appears to come from large population centers like NY. This means the data sample is biased, which makes the entire analysis invalid for making any inferences outside of NY or, at best, areas with similar population density. It would be antithetical to the scientific method if such data were used to make decisions in, for example, Wyoming or rural Virginia. While these models can sometimes provide decision makers with useful information, the actual decisions that are being made during this crisis are far too important and complex to be based on such imprecise data.
There are volumes of scientific literature that explain the limitations of reductionist methods, if the reader wishes to investigate this further.
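
For readers who want to see the point about interval width in concrete terms, here is a minimal sketch in Python. It assumes a simple normal approximation (z = 1.96 for a roughly 95% CI), and the numbers are made up purely for illustration; they are not COVID-19 data and the function names are hypothetical. It only shows that a small, noisy sample produces an interval that is wide relative to the estimate itself.

```python
import math
import statistics


def mean_confidence_interval(sample, z=1.96):
    """Return (mean, lower, upper) for an approximate 95% CI of the mean.

    Uses the normal approximation: mean +/- z * (sample std dev / sqrt(n)).
    """
    n = len(sample)
    mean = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)
    return mean, mean - z * std_err, mean + z * std_err


def relative_width(sample):
    """Width of the CI divided by the size of the estimate itself."""
    mean, lower, upper = mean_confidence_interval(sample)
    return (upper - lower) / abs(mean)


if __name__ == "__main__":
    # Hypothetical, illustrative counts only (not real data).
    small_sample = [2, 40, 9, 75, 13]
    # Same spread of values, but twenty times as many observations.
    large_sample = small_sample * 20

    for name, sample in (("small", small_sample), ("large", large_sample)):
        mean, lower, upper = mean_confidence_interval(sample)
        print(f"{name}: mean={mean:.1f}, CI=({lower:.1f}, {upper:.1f}), "
              f"relative width={relative_width(sample):.2f}")
```

When the relative width is close to or larger than 1, the interval is about as big as the estimate, which is the situation described above: the estimate says very little.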

Considering the limitations of this tool under controlled laboratory conditions, imagine what happens within more complex systems that encompass large areas, contain millions of people, and vary with time (e.g., seasonal or annual changes). In fact, when it comes to predicting outcomes within complex, adaptive, and dynamic systems, where controlled experiments are not possible, where data is lacking, and where large amounts of uncertainty exist, the reductionists’ tool is NOT useful. This is one reason why climate change modeling has little credibility among real scientists and modelers. Researchers who speak as if their answers to such complex and uncertain problems are unquestionable and who politicize issues like COVID-19 (and climate change) are by definition pseudo-scientists. In fact, the scientific literature (including work by a Nobel Prize winner) shows that individual “experts” are no better than laymen at making quality decisions within systems characterized by complexity and uncertainty. The pseudo-scientists want to hide this fact. They like to simplify reality by ignoring or hiding the tremendous amount of uncertainty inherent in these models. They do this for many reasons: it’s easier to explain cause/effect relationships, it’s easier to “predict” consequences (that’s why most of their predictions are wrong or always changing), and it’s easier to identify “victims” and “villains”. They accomplish this by first asking the wrong questions. For COVID-19, the relevant question is not, “how many people will die?” (a divisive and impossible question to answer), but rather, “what can we do to avoid, reduce, and mitigate this disease without destroying our economy and civil rights?” Secondly, pseudo-scientists hide and ignore the assumptions inherent in these models. The assumptions are the premise of any model; if the assumptions are violated or invalid, the entire model is invalid. Transparency is crucial to a useful model and to building trust among the public.
In short, whether a model is useful or useless has more to do with a person’s values than with science. The empirical evidence is clear: what’s really needed is good thinking by actual people (not technology) in order to identify and choose quality alternatives. Technology will not solve these issues and should only be used as an aid or tool (and only if it is as transparent and reliable as possible). What is needed, and what the scientific method has always required (but is nowadays ignored), is what is called multiple working hypotheses. In layman’s terms, this simply means that we include experts and stakeholders with different perspectives, ideas, and experiences.

The solution, and the type of modeling that is needed to make quality decisions (not just “predict” numbers) for the COVID-19 crisis, is what we modelers call participatory scenario modeling. This method uses Decision Science tools (like Bayesian networks and Multiple Objective Decision Analysis) that explicitly link data with the knowledge and opinions of a diverse mix of subject matter experts (SMEs). The method uses a systems (NOT a reductionist) approach and seeks to help the decision maker weigh the available options and alternatives. The steps are: frame the problem/question appropriately, develop quality alternatives, evaluate the alternatives, and plan accordingly (i.e., make the decision). The key is participation from a diverse set of SMEs from interdisciplinary backgrounds working together to build scenario models that help decision makers assess the decision options in terms of the probabilities of the possible outcomes. Certain models (like COVID-19) require a diverse set of experts, whereas climate change models require participation from stakeholders and experts. The participatory nature of the process makes assumptions more transparent, helps people better understand the issues, and builds trust among competing interests.
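
To give a rough feel for the “evaluate the alternatives” step, here is a minimal sketch in Python of a weighted additive score, which is one simple form of multiple objective decision analysis. It is an assumption-laden toy, not a Bayesian network and not any model actually used for COVID-19: the experts, alternatives, objectives, scores, weights, and function names are all hypothetical. Scores from several experts are averaged, and the decision maker’s weights on each objective then determine how the alternatives rank.

```python
from statistics import fmean


def pool_expert_scores(expert_scores):
    """Average each (alternative, objective) score across experts.

    Every expert is weighted equally, which is itself an assumption.
    """
    first = expert_scores[0]
    return {
        alt: {obj: fmean(e[alt][obj] for e in expert_scores) for obj in first[alt]}
        for alt in first
    }


def weighted_value(scores, weights):
    """Weighted additive value of each alternative, normalized by total weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {
        alt: sum(weights[obj] * objs[obj] for obj in weights) / total
        for alt, objs in scores.items()
    }


def rank_alternatives(values):
    """Alternatives ordered from highest to lowest value."""
    return sorted(values, key=values.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical scores (0 = worst, 1 = best) from two hypothetical experts.
    experts = [
        {"option_a": {"health": 0.8, "economy": 0.2},
         "option_b": {"health": 0.4, "economy": 0.8}},
        {"option_a": {"health": 0.6, "economy": 0.4},
         "option_b": {"health": 0.6, "economy": 0.6}},
    ]
    pooled = pool_expert_scores(experts)
    # Two hypothetical sets of weights reflecting different values.
    for weights in ({"health": 1, "economy": 1}, {"health": 3, "economy": 1}):
        print(weights, rank_alternatives(weighted_value(pooled, weights)))
```

The point of laying it out this way is that the scores and the weights are written down where everyone can see and dispute them, and that changing the weights (the values) can change the ranking even when the data stay the same.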

For the COVID-19 issue, we likely need a set of health/medical and economic models that feed into final decision-support models, which in turn help the decision makers weigh their options. No experienced decision maker would (or should) rely on any one model or any one SME (especially if they come from the deep state) when making complex decisions with so much uncertainty and so much at stake. Pseudo-scientists only allow participation from SMEs who drink the Kool-Aid and agree with their agenda. In other words, they often rig the participatory models. I’m not saying this is occurring with COVID-19, but it is happening with climate change models, and people are trying to politicize this current crisis. There is always a danger of cherry-picking the SMEs. Let’s hope that is not happening during the COVID-19 crisis.

Science is a quest for Truth, not consensus. We can never know the Truth in the way that God does (He is God, we are people). The scientific method, if carried out with honor and integrity, seeks only to estimate and interpret God’s Truth as best we can, so that we may make wise decisions for ourselves, our families, our community, and our Country. In fact, the great scientists of the past 400 years who created and developed the scientific method, and who by the way were deeply religious men, have always required the use of multiple working hypotheses. Some scientists, like myself, have even called for making science itself more democratic (at least for environmental issues), which is why we are treated as heretics by the pseudo-scientists in academia. The solutions for many of the issues we face as a society lie not in “black box” reductionist models or “apps” and algorithms developed by ivory tower eggheads and “Big Tech” programmers, but rather with real people (experts and stakeholders). We must participate and find solutions together, using technology as a tool, not a panacea. To those dogmatic, deep state pseudo-scientists in academia (and their lackeys in the “media” and Congress), I will end with a history lesson from Francis Bacon, the founder of the scientific method. Bacon himself did not intend the scientific method (“the interpretation of nature”, as he called it) to be the whole truth, but rather only one part of it:

Let there be therefore (and may it be for the benefit of both) two streams and two dispensations of knowledge, and in like manner two tribes or kindreds of students in philosophy—tribes not hostile or alien to each other, but bound together by mutual services; let there in short be one method for the cultivation, another for the invention, of knowledge. (Francis Bacon 1620).

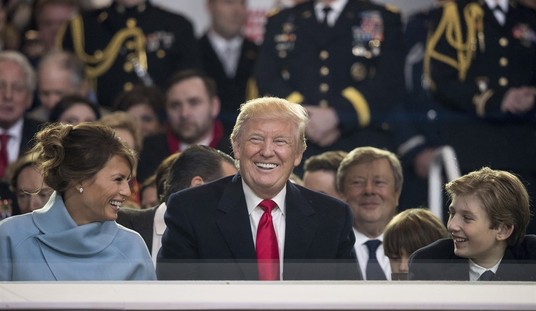

Biography: Jon McCloskey is a U.S. Navy veteran who worked his way through college and earned his Ph.D. in Ecology and Environmental Science in 2006. He has minors in Statistics and Remote Sensing/GIS and is the author of numerous peer-reviewed scientific research articles. Dr. McCloskey has created several innovative spatial and statistical models and decision-support tools for a wide variety of professional SMEs outside of Environmental Science. Dr. McCloskey is despised by the pseudo-scientists in the Environmental “profession” for his refusal to drink the Kool-Aid and keep his mouth shut… and because he supports President Trump (they really hate that).
