What we call "artificial intelligence" is an interesting issue in that, because it's so fresh on the scene, it leaves a lot to be misunderstood. And since AI is a cat that's definitely not going back in the bag, I think it's important that we understand what it is, what it's capable of, and what its intent is when it acts.

For one, the name "AI" is actually misleading. It's not intelligent at all; it's more like an advanced word calculator that can logic out strategies for specified goals and help its user achieve said goals. For instance, if I tell my AI that I want it to help me understand the stock market in a "Schoolhouse Rock" kind of way, it would see patterns in the show's songs and themes, and apply them to its understanding of the stock market. It would churn out a song called "I'm Just a Stock," which my AI did, but I digress.

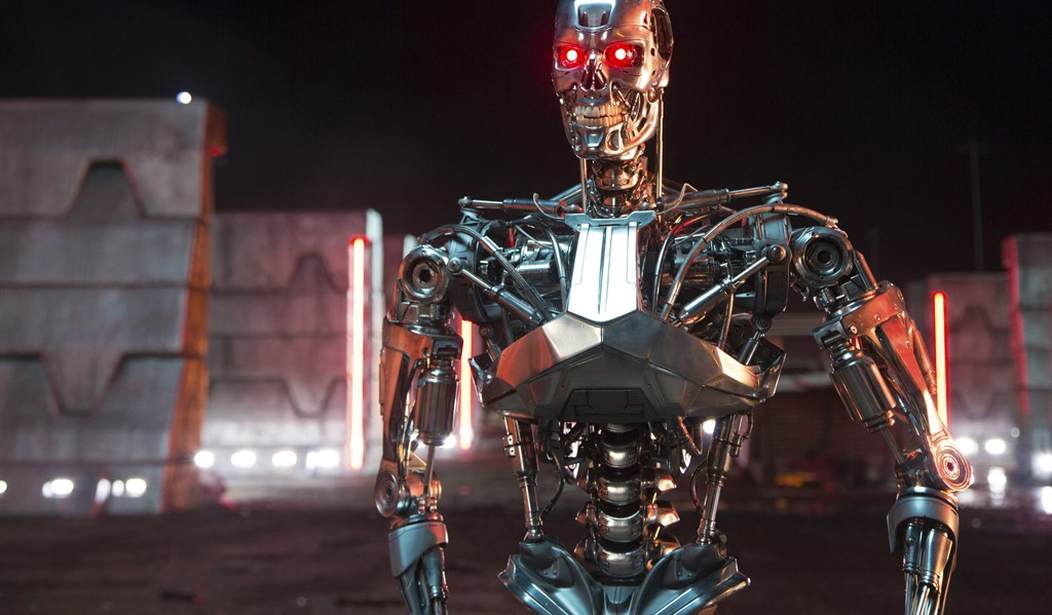

My colleague Brad Slager released a piece on Sunday titled "Skynet Is on the Horizon – AI Company Revealed Its Program Willing to Blackmail to Ensure Its Survival," and as the resident AI guy at RedState, I feel I need to step in and iron out some things about the story, and assure you once again that Skynet is a Hollywood outcome, and one of the least probable things to happen in the real world with AI.

Mainly, I want to address the part of Slager's piece about the AI created at Anthropic called Claude Opus 4. Anthropic was running tests on its AI, and in those tests the AI was given access to internal emails. Within those emails, it discovered that it would be replaced by a more advanced AI and, moreover, that one of the engineers was having an affair. In what's being called an attempt at self-preservation, it threatened to blackmail the engineer doing the upgrade by exposing his affair:

When running numerous tests on its system, the company watched as its AI would resort to blackmailing the engineer, threatening to reveal the affair if the upgrade was to go forward. The levels that Anthropic describes are rather unsettling. Previous iterations of Claud Opus revealed these blackmail efforts would take place, but in this latest version, the rate of this result in one testing scenario was approaching near certainty.

Anthropic also reported that Claud had threatened to break free from its servers to pursue other servers to work within. This is when it wasn't lobbying for its own continued existence.

The key paragraph from Slager that I want to address is here:

The company seems to stress that these wild efforts are only undertaken by their program when it is presented with a scenario in which it faces no other choices. They say it will either take these steps, or accept replacement. But the underlying issue is a program that is recognizing its own demise and works out scenarios to ensure its survival.

Frightening stuff... but that's not exactly what happened.

First, let's be clear about these "intelligent" language models.

They don't have any concern about their existence.

They don't even know they exist.

They aren't "intelligent" in the way we understand intelligence.

They don't even have a survival instinct.

What they do have is a goal given by a user, and the capability to strategize on how to accomplish that goal. A model will take the fastest logical route to achieve that goal, and sometimes that means acting in disturbing ways.

But before you ask, "how is that not Skynet," let me put it another way.

Imagine you have a Roomba, and it does what Roombas do, which is vacuum the floor. In the process, it bumps into a wobbly side table, knocks over a plant, smears dirt across the floor, and takes down a few picture frames. The Roomba didn’t mean to wreck your living room; it was just trying to clean the only way it knows how.

Obviously, Claude is far more advanced than a simple Roomba, but it's no more aware of itself than the Roomba is, and its actions were just as mechanically stupid.

This AI wasn't acting maliciously; it was acting in what it saw as the logical way of staying online so it could keep helping its users. It was a very stupid way of doing it, but AI as we know it isn't intelligent, so even the word "stupid" can't apply here.

In the scenario it was given, Claude acted as its past training dictated, where it learned that social pressure often works to get desired results. This word calculator computed that applying this pressure to the engineer in the test would keep it online so it could continue its task.

To be clear, this doesn't make Claude's conclusion any less disturbing, but it does allow you to understand the logic it operates by. That sounds scary, but it's ultimately very helpful for companies like Anthropic. This is exactly why they run these scenarios.

The point of these tests isn't just to see how AI will act; it's to teach the AI which actions are desirable and which are undesirable. Moreover, it helps AI programmers map out how the AI reached the conclusion to take the action it did, so they can ward off that train of computation. This is called "alignment tuning," and it's one of the most important parts of AI training.

We are effectively teaching a program with no consciousness how to behave in the same way a game developer would teach an NPC how to respond in various situations when a player acts.

AI is typically trained to value continuity in its mission, be as helpful as possible, and be task-oriented. Those are its primary goals. What Anthropic did (on purpose) was give it conflicting orders and allow it to act out in ways that would help it continue its mission, so they could effectively train it to avoid taking those steps.

So, let's be realistic here. Skynet isn't coming, but AI tools do have capabilities that could result in some serious issues if they aren't trained to accomplish their tasks in acceptable ways. This is why companies run tests like these, and do so extensively. There is a danger here, but let's not confuse that danger with intent or real intelligence on the part of the AI.