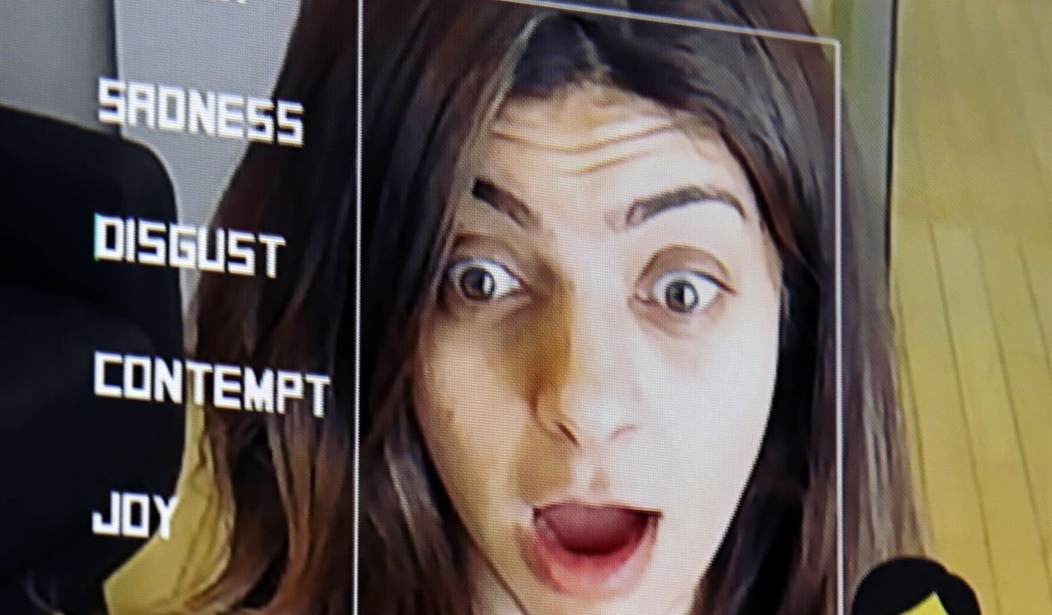

If you thought the revelations about government surveillance and censorship in the Twitter Files were terrifying, you won’t believe how law enforcement agencies across the United States are using artificial intelligence in questionable ways. A high-profile facial recognition company has been working with law enforcement agencies, ostensibly to help them solve crimes, in a way that one news outlet described as a “perpetual police lineup.”

Clearview AI’s facial recognition database has been used extensively by police departments in the US. According to the company’s CEO, the database was built from 30 billion images scraped from Facebook and other social media sites without users’ permission.

Insider reported:

The company, Clearview AI, boasts of its potential for identifying rioters at the January 6 attack on the Capitol, saving children being abused or exploited, and helping exonerate people wrongfully accused of crimes. But critics point to privacy violations and wrongful arrests fueled by faulty identifications made by facial recognition, including cases in Detroit and New Orleans, as cause for concern over the technology.

The database has been used to identify suspects, including alleged rioters at the US Capitol, and law enforcement agencies have used it almost one million times since its launch in 2017. Clearview AI’s database is not available to the general public; only law enforcement agencies can access it. However, the company has been criticized for privacy violations and for contributing to wrongful arrests caused by inaccurate facial recognition matches.

Residents of Illinois can opt out of the technology, but residents of other states do not have this option. The company has received cease-and-desist letters from social media companies, including Facebook, over privacy violations. Clearview AI has partnerships with numerous US law enforcement agencies, including the FBI and the Department of Homeland Security.

The company’s CEO, Hoan Ton-That, told the BBC that his software is used by hundreds of police forces in the US for all types of crimes, and he claimed it has helped solve several murders. However, Clearview AI has repeatedly been fined for breaches of privacy in Europe and Australia. Critics argue that this collaboration puts everyone in a “perpetual police line-up.” The company has also been accused of misidentifying people, but Clearview claims a near-100 percent accuracy rate.

Civil rights campaigners are calling for law enforcement agencies that use Clearview to be transparent about its use, and for its accuracy to be scrutinized by independent experts. There are almost no laws governing the use of facial recognition by police, and Clearview is banned in several US cities.

Clearview is also barred from selling its services to most US companies. Even so, Miami Police confirmed to the BBC that it uses Clearview’s software for every type of crime. While some point to specific cases where Clearview has proven effective, others believe that the use of Clearview and other facial recognition technologies comes at too high a cost to civil liberties and civil rights.

Critics have been sounding the alarm that this collusion between the state and a private company could violate people’s rights. Matthew Guariglia, a senior policy analyst at the Electronic Frontier Foundation, an international non-profit digital rights group, told Insider that law enforcement’s use of this software could be problematic from a natural rights perspective.

“You don’t know what you have to hide,” he said. “Governments come and go and things that weren’t illegal become illegal. And suddenly, you could end up being somebody who could be retroactively arrested and prosecuted for something that wasn’t illegal when you did it.”

Kaitlin Jackson, a criminal defense lawyer in New York, told the BBC that the technology is not as accurate as Clearview would have us believe. “I think the truth is that the idea that this is incredibly accurate is wishful thinking,” she said. “There is no way to know that when you’re using images in the wild like screengrabs from CCTV.”

Matt Agorist wrote a piece for the Free Thought Project in which he explains that this type of surveillance is not a new development. He wrote:

A 2019 report revealed that the federal government has turned state driver’s license photos into a giant facial recognition database, putting virtually every driver in America in a perpetual electronic police lineup. The revelations generated widespread outrage, but the story wasn’t new. The federal government has been developing a massive facial recognition system for years.

The FBI rolled out a nationwide facial recognition program in the fall of 2014, with the goal of building a giant biometric database with pictures provided by the states and corporate friends.

What would the average citizen say if they knew that companies like Clearview were scraping their images without their knowledge or consent and sharing them with law enforcement agencies at the local, state, and federal levels? If they were smart, they would be horrified. The problem is that most people don’t know this is happening, which is how the government gets away with collaborating with private companies in ways that may violate our rights. The idea that a company could do this without breaking the law is not only shocking but dangerous. Yet it is happening right under our noses.