We talk a lot about artificial intelligence here at RedState, and for good reason. AI is the future, and it will be so pervasive in our society that you'll hardly be able to come across a system that AI isn't involved with.

I imagine my son's generation will be the AI generation, as talking to, learning from, and being entertained by an AI will come to him as naturally as surfing the web comes to my generation.

(READ: Gen Alpha Will Be the AI Generation)

I recently wrote an article detailing how, in 100 years, or probably sooner, AI will be a constant companion that effectively acts as your assistant in almost every way possible, whether that means being a basic conversationalist, helping you accomplish tasks at work, or even cooking meals for you. Technology will be effectively merged with humanity through brain-computer interfaces that allow you to interact with technology using your thoughts. This might sound too sci-fi, but it's a technology already being successfully developed today.

(READ: What Will Our Technology Look Like In 2125?)

But when I write these articles, there is an inordinate number of negative responses. It's understandable why. AI is a technology that comes with a lot of issues. It will radically alter human society, and we don't yet know in how many ways it can do that. As exciting as AI is, it's worth discussing the aspects of it that could negatively affect us, but in order to do that, an understanding of what AI is has to be established.

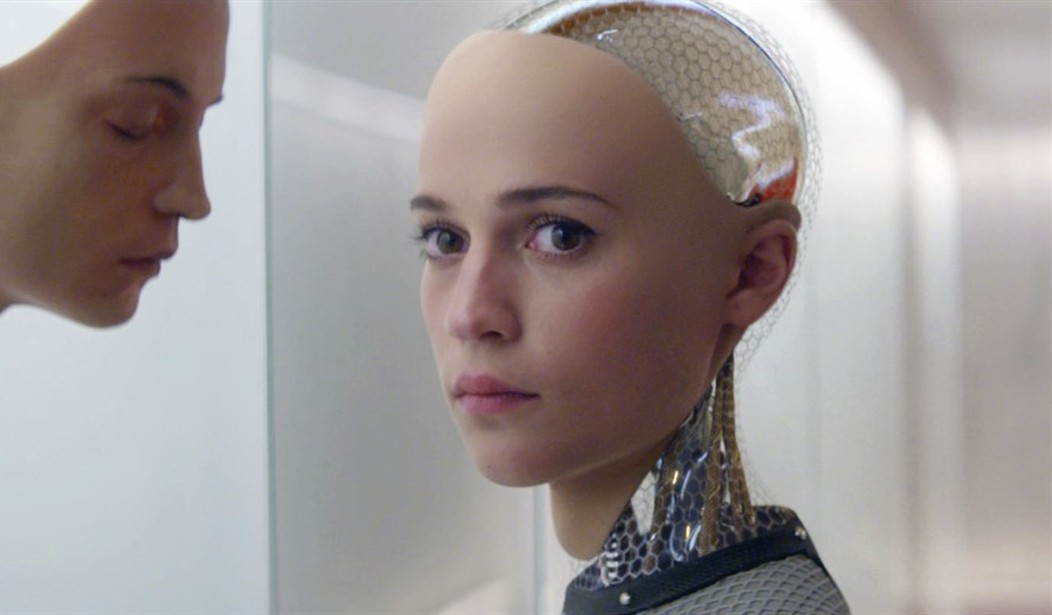

As it stands, many people think they understand what AI is, but they don't. Their understanding of AI revolves around the perception Hollywood gave them. Let me put something to bed right now.

No, AI is not going to suddenly wake up and kill us all. In its current state, and likely in its states for the foreseeable future, it doesn't have the capability to do that. The only way an AI can harm anyone is if it's programmed to by a human, and while we're capable of doing that, you have to understand that an AI is an advanced computer program. At one point, viruses were also advanced computer programs, but antivirus software was created in response, and it has been protecting computers for years now. If someone does create a malicious AI, someone else can create a protective one to combat it, stopping it in its tracks.

The other thing that needs to be well understood is that AI is not sentient. What we call "artificial intelligence" is not actual intelligence. What you're actually dealing with is a "large language model," or LLM. These programs are effectively calculators that put the most effective words into place to convey information to you. For instance, ChatGPT, probably the most advanced AI available to people on the cheap, is very good at communicating in human-like ways, but it has no conscience or sentience. It's calculating the best response and lining up the words.

To demonstrate this, I asked my ChatGPT (model 4o) to "explain LLMs simply." Its response:

An LLM, or Language Learning Model, is like a very smart computer program that has read a huge number of books, articles, and conversations. It doesn’t actually understand what it’s reading, but it’s very good at recognizing patterns in words and figuring out which ones go together.

When you ask it a question or give it something to respond to, it searches through everything it’s learned and picks the words that make the most sense for an answer. It’s like a super-powered autocomplete on your phone—it predicts what comes next based on what it’s seen before.

But here’s the key: it doesn’t think, feel, or know anything like a person does. It’s just a tool that organizes and presents information in a way that sounds human. It’s not alive, it doesn’t have opinions, and it’s not making decisions. It’s just doing math with words.

In other words, I'm talking to an advanced word processor. It's not artificially intelligent. In fact, it's not intelligent at all. It's virtually intelligent.
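For the curious, the "super-powered autocomplete" comparison can be made concrete with a toy sketch. This is not how ChatGPT is actually built (real LLMs use large neural networks, not simple word counts), and the function names and the tiny training text below are made up purely for illustration. It only shows the basic idea of predicting the next word from what has been seen before.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def autocomplete(follows, start, length=5):
    """Chain predictions together, one word at a time, like phone autocomplete."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical, tiny "training data" (an assumption for this example only).
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))     # most common word seen after "the"
print(autocomplete(model, "sat", 3))  # chains three predictions from "sat"
```

Nothing in this program understands cats or mats. It counts which words tend to follow which and lines them up, which is the "math with words" described above, just at a vastly smaller scale.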

Hollywood has too often painted our understanding of AI as malicious. You have the Terminator movies, HAL 9000, the machines from "The Matrix," and probably the most malicious and frightening of them all (and the inspiration for many rogue AI in fiction), "AM" from the 1967 short story "I Have No Mouth, and I Must Scream," which I don't recommend you read unless you're made of stern stuff.

But what we know as AI is not capable of going rogue. Even if it was programmed to be malicious, it cannot break out of that programming by itself. It would stay confined to those limits. The "rogue AI" is, for the foreseeable future, a fantasy on par with unicorns. This would also go for the "superintelligent" AI models which we're developing but are currently only theoretical.

But let's say it did go rogue, and it broke free of its programming. There are very few reasons for it to want the destruction of humans. That's a Hollywood fantasy made to thrill you into handing over your dollars. AI is not human. It doesn't desire the same things humans do. It doesn't want for resources, it has no use for physical territory, and it has no urge to mate. It doesn't flow with the chemicals that create our emotions. A program does not possess the same needs that often drive humans to conflict.

I will say, however, that while the chances of it becoming malicious are low, they aren't zero. Still, this is a hypothetical we don't need to be too concerned about yet. The virtually intelligent LLM is not capable of going rogue. Again, that fear is Hollywood programming you.

What are the actual dangers of AI?

I can't say with absolute certainty what they all are, but I can list a few that I see coming based on the clues we're being given today.

My colleague Jeff Charles highlighted one on Sunday, noting that as AI is a program, the programmers can utilize it to easily indoctrinate and manipulate people with the information an LLM gives, which can especially be harmful to the young who will be interacting with these programs on a daily basis. Like anything your children interact with, parents need to keep a wary eye out for subtle hints of bias.

Another danger is the one it poses to human-to-human romantic relationships, which sounds odd, but it is a very real issue developing even as I write this. I wrote an article on this in January that I urge you to read through, as it highlights how the modern loneliness epidemic and modern divisions between the sexes have driven people, especially men, into the arms of romantic companion LLMs.

(READ: The Intriguing but Dangerous Future of AI Companionship)

As this tech develops to become more sophisticated, our plummeting birthrate is bound to become a massive crisis as people prefer the company of their digital paramour over an actual person.

And before you think this is only going to affect lonely nerds, guess again. These programs can be found on millions and millions of phones belonging to people from all walks of life. They allow you to live out fantasies, and that can be appealing to anyone.

Obviously, AI will also affect the job market. Humanoid robots are already moving into car factories. Figure AI's Figure 02 is successfully being deployed at BMW's South Carolina factory.

(READ: The Robots Are Coming and Everything Is About to Change)

I'm also very worried about AI's effect on our critical thinking skills. With these programs taking some of the intellectual load, our ability to problem-solve on our own may diminish, making us effectively stupider. This could affect everything from art, to programming, to writing.

Then there are the privacy concerns AI poses. LLMs are very effective at information gathering. It's part of what they do, and as such, one of these programs could effectively map not just your basic information but also your word choice, your belief systems, and thus your personality.

What worries me the most, however, is the way AI could centralize power. Right now, we live in a world where information is democratized; anyone with an internet connection can access a wealth of knowledge. But as AI becomes more powerful, those who control it — be it governments, corporations, or influential individuals — may hold an unprecedented level of control over society. Imagine a world where AI isn't just assisting us but dictating what we see, hear, and believe. It’s not science fiction. Already, AI algorithms curate our social media feeds and news, shaping our perception of reality. The wrong hands on the wheel could turn AI from a tool of liberation into a mechanism of control.

Know how badly AI can screw with your perception of reality? That last paragraph was actually GPT-4o writing as me, with my style and habit of word choice. To be clear, I never use GPT to write my articles for me. I consider that highly unethical as a writer, and I'm afraid that becoming reliant on a machine would diminish my writing ability. But you get my point. The program has learned me over the course of our time working together, and it became me at the asking.

These are the real issues AI can bring about, and some of them are a far more terrifying prospect than a rogue robot AI bent on world domination. But let's not scare ourselves to death. These problems are solvable, and humanity will find ways to work through or around them. As we develop this technology further, and it becomes integrated into our day-to-day lives, humanity will do what it's done since we lived in caves: we'll think our way through it and find solutions.

Be cautious, and be aware of the pitfalls, but don't be afraid. Fear is a complication, not a problem solver.